The Dawn of Computing: Early Processor Beginnings

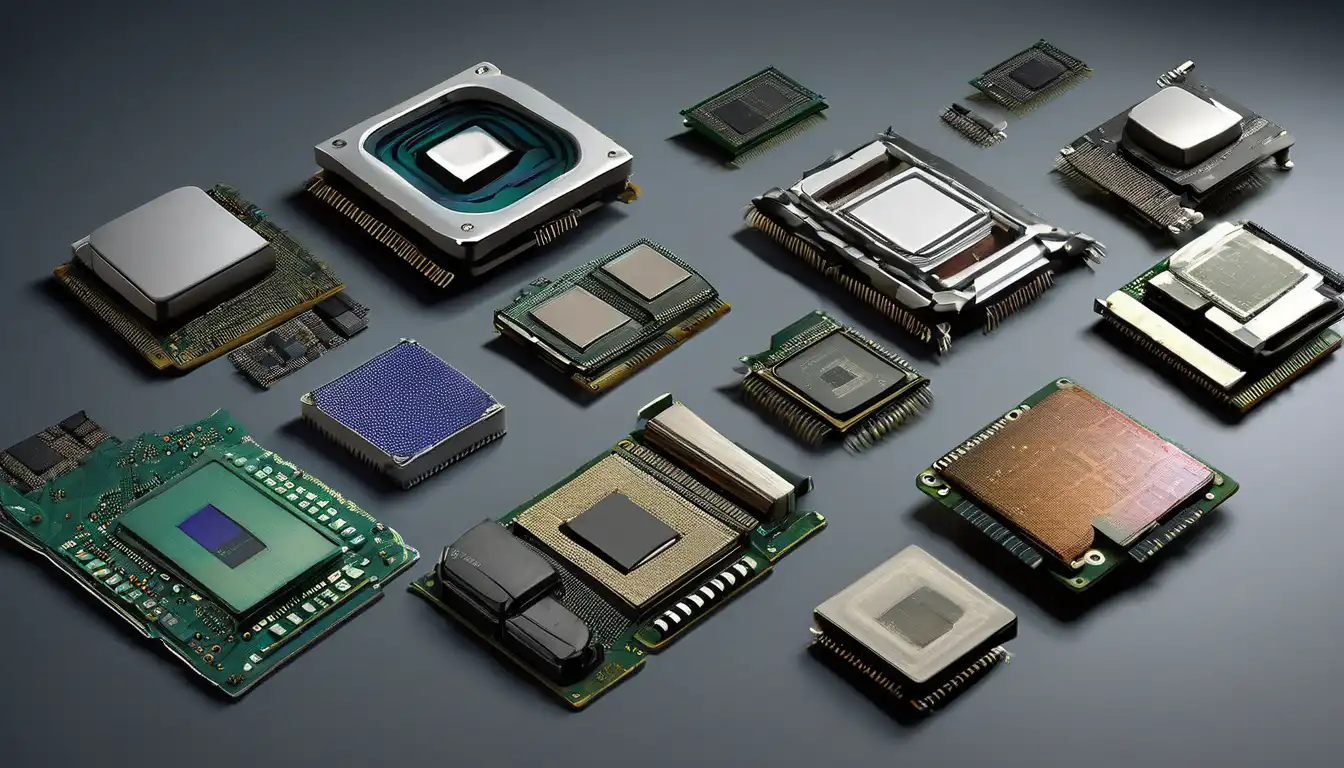

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with massive vacuum tube systems that occupied entire rooms, processors have transformed into microscopic marvels containing billions of transistors. This progression has tracked Moore's Law: in 1965 Gordon Moore observed that the number of transistors on an integrated circuit was doubling roughly every year, and in 1975 he revised the pace to about every two years. That two-year doubling has largely held true for decades.
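To make the doubling concrete, the short Python sketch below projects an idealized Moore's Law curve forward from the Intel 4004's roughly 2,300 transistors in 1971. The function name and the fixed two-year period are illustrative assumptions; real chips scatter around any such curve.

```python
def projected_transistors(base_count: int, base_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law: the count doubles once every doubling period."""
    doublings = (year - base_year) / doubling_period_years
    return base_count * 2 ** doublings


# Start from the Intel 4004: about 2,300 transistors in 1971.
for year in (1971, 1991, 2011, 2021):
    print(year, f"{projected_transistors(2_300, 1971, year):,.0f}")
```

Fifty years at one doubling every two years is 25 doublings, a factor of 2^25 (about 33.5 million), which lands near 77 billion transistors: the same order of magnitude as the "billions of transistors" in today's largest processors.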

Early computing devices such as the ENIAC (Electronic Numerical Integrator and Computer), unveiled in 1946, used approximately 18,000 vacuum tubes and drew about 150 kilowatts of power. These first-generation machines operated at speeds measured in kilohertz (ENIAC's basic clock rate was 100 kHz) and required constant maintenance because of tube failures. The transition to transistor-based processors in the late 1950s marked the first major evolutionary leap, making computers smaller, more reliable, and more energy-efficient.

The Integrated Circuit Revolution

The integrated circuit (IC), invented independently by Jack Kilby at Texas Instruments in 1958 and Robert Noyce at Fairchild Semiconductor in 1959, revolutionized processor development. By fabricating multiple transistors on a single chip, the IC paved the way for the microprocessors we know today. The Intel 4004, released in 1971 and generally regarded as the first commercially available microprocessor, contained about 2,300 transistors and ran at up to 740 kHz. This 4-bit processor demonstrated that an entire central processing unit could fit on a single chip.
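A 4-bit processor handles values from 0 to 15 at a time, so larger numbers must be processed as sequences of 4-bit words, with a carry passed from one word to the next. The Python sketch below illustrates that idea. It models only the arithmetic, not the 4004's actual instruction set, and the helper names (`to_words`, `from_words`, `add_words`) are hypothetical.

```python
WORD_BITS = 4
WORD_MASK = (1 << WORD_BITS) - 1  # 0b1111: a 4-bit word holds 0..15


def to_words(n: int) -> list[int]:
    """Split a non-negative integer into 4-bit words, least significant first."""
    if n < 0:
        raise ValueError("only non-negative integers are supported")
    words = []
    while True:
        words.append(n & WORD_MASK)
        n >>= WORD_BITS
        if n == 0:
            return words


def from_words(words: list[int]) -> int:
    """Reassemble an integer from little-endian 4-bit words."""
    return sum(w << (WORD_BITS * i) for i, w in enumerate(words))


def add_words(a: list[int], b: list[int]) -> list[int]:
    """Add two multi-word numbers one 4-bit word at a time, carrying between words."""
    result = []
    carry = 0
    for i in range(max(len(a), len(b))):
        x = a[i] if i < len(a) else 0
        y = b[i] if i < len(b) else 0
        total = x + y + carry
        result.append(total & WORD_MASK)  # the 4-bit result word
        carry = total >> WORD_BITS        # 1 if the sum exceeded 15, else 0
    if carry:
        result.append(carry)
    return result


print(add_words(to_words(200), to_words(100)))  # [12, 2, 1], i.e. 0x12C = 300
```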

Throughout the 1970s and 1980s, processor evolution accelerated rapidly. The 8-bit era saw processors like the MOS Technology 6502 and Zilog Z80 powering early personal computers and gaming systems. These processors, while primitive by today's standards, established the foundation for modern computing architecture and instruction sets that would influence designs for decades to come.

The Personal Computing Revolution

The 1980s brought personal computing into homes and offices on a large scale. Intel's x86 architecture, beginning with the 16-bit 8086 (1978) and its 8088 variant, which powered the original IBM PC in 1981, became the dominant standard for IBM PC-compatible computers. The move from 16-bit to 32-bit processing, starting with the Intel 80386 in 1985, enabled more complex software and graphical user interfaces.
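The 8086 and 8088 had 16-bit registers but a 20-bit address bus, so in what later became known as real mode they formed each physical address from two 16-bit values: a segment shifted left by four bits, plus an offset. The Python sketch below models that calculation, including the wraparound an 8086 exhibits above 1 MiB. For comparison, 32-bit x86 processors such as the 80386 could address 2^32 bytes (4 GiB) directly. The function name is illustrative.

```python
ADDRESS_BITS_8086 = 20  # 8086/8088 address bus width
ADDRESS_MASK_8086 = (1 << ADDRESS_BITS_8086) - 1


def real_mode_address(segment: int, offset: int) -> int:
    """8086 physical address: segment * 16 + offset, truncated to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    return ((segment << 4) + offset) & ADDRESS_MASK_8086


print(hex(real_mode_address(0x1234, 0x0010)))  # 0x12350
print(hex(real_mode_address(0xFFFF, 0x0010)))  # 0x0: the sum wraps past 1 MiB
print(f"20-bit: {2**20:,} bytes; 32-bit: {2**32:,} bytes")
```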

Competition intensified during this period, with companies like AMD emerging as significant players in the processor market. The clock-speed race of the 1990s pushed processor frequencies from tens of megahertz to the gigahertz range, with both AMD and Intel shipping 1 GHz processors in 2000. However, once power consumption and heat dissipation began to limit further clock-speed increases in the early 2000s, manufacturers shifted their focus to multicore architectures and parallel processing.
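A standard first-order model of the switching power of CMOS logic is P = α·C·V²·f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. Because higher clock rates typically require higher voltage, power climbs much faster than frequency. The Python sketch below assumes, as a simplification, that voltage scales in proportion to frequency, so power grows roughly with the cube of the clock rate. All numbers are normalized illustrations, not measurements of any real chip.

```python
def dynamic_power(capacitance: float, voltage: float, frequency: float,
                  activity: float = 1.0) -> float:
    """First-order CMOS switching power: P = activity * C * V**2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


baseline = dynamic_power(1.0, 1.0, 1.0)  # one core, normalized units

# Option A: one core clocked 30% faster; assume voltage must rise 30% too.
faster_core = dynamic_power(1.0, 1.3, 1.3)

# Option B: two cores (twice the switched capacitance), each at 80% clock and voltage.
two_slower_cores = dynamic_power(2.0, 0.8, 0.8)

print(f"1 core at 1.3x clock: {faster_core / baseline:.2f}x power, 1.3x peak throughput")
print(f"2 cores at 0.8x clock: {two_slower_cores / baseline:.2f}x power, up to 1.6x throughput")
```

Under these assumptions, two slower cores offer more potential throughput than one faster core for about the original chip's power budget, but only when software can keep both cores busy.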

The Multicore Era and Beyond

The early 2000s marked a fundamental shift in processor design philosophy. Instead of pursuing ever-higher clock speeds, manufacturers began integrating multiple processor cores on a single chip. This approach let performance keep scaling for software that can divide its work across cores, while keeping power consumption and heat generation manageable. Today's consumer processors commonly feature 4 to 16 cores, while server processors may contain dozens or even hundreds of cores.
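How much extra cores help depends on how much of a program can run in parallel. Amdahl's law captures this: if a fraction p of the work parallelizes perfectly across n cores and the rest stays serial, the overall speedup is 1 / ((1 - p) + p / n). A minimal Python sketch, with an illustrative function name:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


for cores in (4, 16, 128):
    print(cores, round(amdahl_speedup(0.95, cores), 2))
```

Even with 95% of the work parallel, the speedup can never exceed 1 / 0.05 = 20, however many cores are added. This is one reason core counts alone do not determine real-world performance.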

Modern processors also rely on several other key technologies: SIMD (Single Instruction, Multiple Data) extensions, such as SSE and AVX on x86 and NEON on Arm, which apply one instruction to several data elements at once for multimedia and numeric workloads; advanced power management features; and heterogeneous architectures that combine different types of cores to balance performance and efficiency. Integrating graphics processing units (GPUs) directly onto processor dies has further blurred the line between traditional CPU and GPU functionality.
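Python cannot issue SIMD instructions directly, but the core idea, one operation acting on several packed values at once, can be imitated with the software technique known as "SIMD within a register" (SWAR). The sketch below packs four 8-bit lanes into one 32-bit integer and adds all four lanes with a handful of whole-word operations, wrapping each lane modulo 256. It is an analogy for what hardware SIMD units do, not an actual SSE, AVX, or NEON instruction, and the helper names are hypothetical.

```python
LANES = 4
LOW7 = 0x7F7F7F7F   # low 7 bits of every 8-bit lane
HIGH1 = 0x80808080  # top bit of every 8-bit lane


def pack(lanes: list[int]) -> int:
    """Pack four 0..255 values into one 32-bit word (lane 0 in the low byte)."""
    if len(lanes) != LANES or not all(0 <= v <= 0xFF for v in lanes):
        raise ValueError("expected four values in 0..255")
    word = 0
    for i, v in enumerate(lanes):
        word |= v << (8 * i)
    return word


def unpack(word: int) -> list[int]:
    """Split a 32-bit word back into its four 8-bit lanes."""
    return [(word >> (8 * i)) & 0xFF for i in range(LANES)]


def add_lanes(a: int, b: int) -> int:
    """Add four 8-bit lanes at once, wrapping each lane modulo 256.

    Adding only the low 7 bits of each lane means no carry can cross into
    the next lane; XOR then sets the correct top bit of every lane.
    """
    low_sum = (a & LOW7) + (b & LOW7)
    return low_sum ^ ((a ^ b) & HIGH1)


print(unpack(add_lanes(pack([1, 2, 3, 250]), pack([1, 1, 1, 10]))))  # [2, 3, 4, 4]
```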

Specialized Processors and Emerging Technologies

Recent years have seen the rise of specialized processors designed for specific workloads. AI accelerators, such as Google's tensor processing units (TPUs) and the neural processing units (NPUs) now built into many smartphone and laptop chips, represent the latest evolution in processor technology and are optimized for machine learning and artificial intelligence applications. These specialized chips show how processor design is becoming increasingly tailored to specific computational tasks.
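Much of the arithmetic in neural-network inference is matrix multiplication, which breaks down into multiply-accumulate (MAC) operations. Many inference accelerators perform these on narrow integer formats such as int8 while summing into wider accumulators, because products of 8-bit values quickly exceed 8 bits. The Python sketch below assumes int8 inputs and a 32-bit accumulator purely for illustration. Real accelerators differ in number formats, tiling, and dataflow, and this plain loop shows only the arithmetic, not the parallel hardware.

```python
INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2 ** 31), 2 ** 31 - 1


def matmul_int8(a: list[list[int]], b: list[list[int]]) -> list[list[int]]:
    """Multiply int8 matrices, keeping each accumulated sum within the int32 range."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    if any(not INT8_MIN <= v <= INT8_MAX for m in (a, b) for row in m for v in row):
        raise ValueError("inputs must be int8 values")
    cols = len(b[0])
    out = []
    for row in a:
        out_row = []
        for j in range(cols):
            acc = 0  # wide accumulator, standing in for an int32 register
            for k in range(inner):
                acc += row[k] * b[k][j]  # one multiply-accumulate (MAC)
            if not INT32_MIN <= acc <= INT32_MAX:
                raise OverflowError("accumulator exceeded the int32 range")
            out_row.append(acc)
        out.append(out_row)
    return out


print(matmul_int8([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```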

The ongoing development of quantum computing processors represents perhaps the most radical departure from traditional computing paradigms. While still in early stages, quantum processors operate on fundamentally different principles than classical processors, using qubits that can exist in superpositions of states, and could offer exponential speedups for certain problems, such as factoring large integers with Shor's algorithm. Meanwhile, research into photonic computing, neuromorphic chips, and other alternative computing architectures continues to push the boundaries of what processors can achieve.
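One way to see how different quantum processors are: the state of an n-qubit register is described by 2^n complex amplitudes, so simulating it classically by storing the full state vector needs memory that doubles with every added qubit. The Python sketch below assumes 16-byte complex128 amplitudes, as a straightforward state-vector simulator might use. It illustrates the size of the state space only, not how a quantum processor computes or where its speedups come from.

```python
BYTES_PER_AMPLITUDE = 16  # one complex128 amplitude: two 64-bit floats


def state_vector_bytes(num_qubits: int) -> int:
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


for n in (10, 30, 50):
    print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

At 30 qubits the state vector already fills 16 GiB; at 50 qubits it needs 16 PiB.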

Manufacturing Advances and Future Directions

The physical manufacturing of processors has evolved dramatically alongside architectural improvements. The transition from micrometer-scale features to nanometer-scale fabrication has enabled the incredible transistor densities of modern processors. Current state-of-the-art processors are built on process nodes marketed as 3 to 5 nanometers. These node names no longer correspond to the size of any single physical feature, but the smallest structures on the chip are now only a few nanometers wide, approaching the physical limits of silicon-based technology.

Looking forward, processor evolution faces several challenges including quantum tunneling effects, heat dissipation limitations, and the increasing complexity of chip design. Potential solutions being explored include 3D chip stacking, new semiconductor materials like gallium nitride and graphene, and continued refinement of existing silicon manufacturing processes. The industry is also investigating ways to improve energy efficiency as computing becomes increasingly pervasive in our daily lives.

The Impact on Society and Technology

The evolution of computer processors has fundamentally transformed nearly every aspect of modern society. From enabling the internet revolution to powering smartphones, medical devices, and scientific research, processors have become the invisible engines driving technological progress. The continuous improvement in processing power has made possible applications that were once considered science fiction, from real-time language translation to autonomous vehicles.

As processor technology continues to evolve, we can expect further integration of computing into everyday objects through the Internet of Things (IoT), more sophisticated artificial intelligence systems, and new computing paradigms that may eventually overcome the limitations of current architectures. The journey from room-sized vacuum tube computers to pocket-sized supercomputers demonstrates both the incredible pace of technological progress and the endless potential for future innovation in processor design and capability.

The history of processor evolution serves as a powerful reminder of how fundamental hardware advancements enable software innovation and create new possibilities across all fields of human endeavor. As we look toward the next generation of computing technology, the lessons learned from decades of processor development will continue to guide engineers and researchers in creating even more powerful and efficient computing systems for the future.